TransFusion: Generating Long, High-Fidelity Time Series using Diffusion Models with Transformers

Abstract

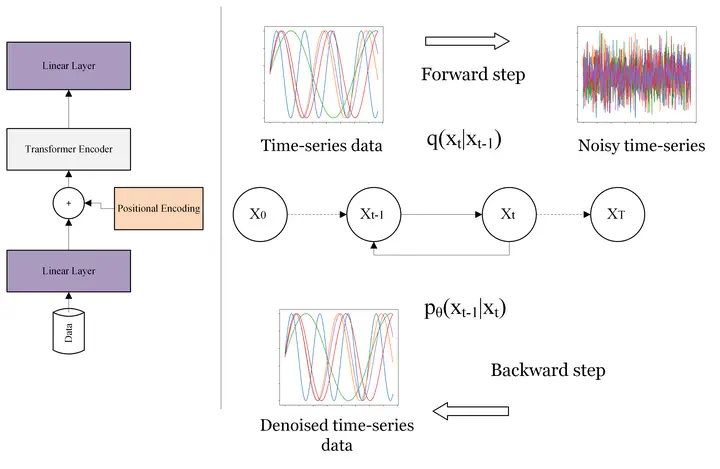

The generation of high-quality, long-sequence time-series data is essential due to its wide range of applications. Previously, standalone recurrent and convolutional neural network-based Generative Adversarial Networks (GANs) were used to synthesize time-series data. However, they are inadequate for generating long sequences because of architectural limitations, such as difficulty capturing long-range dependencies, limited temporal coherence, and poor scalability. Furthermore, GANs are well known for training instability and mode collapse. To address these issues, we propose TransFusion, a diffusion- and transformer-based generative model for generating high-quality, long-sequence time-series data. We extend the sequence length to 384, surpassing the previous limit, and successfully generate high-quality synthetic data. We also introduce two evaluation metrics that assess the quality of the synthetic data as well as its predictive characteristics. We evaluate TransFusion using a diverse set of visual and empirical metrics, and it consistently outperforms the previous state of the art by a significant margin.