Ph.D. Student

Md Fahim Sikder is a researcher specializing in algorithmic fairness and generative modeling. He completed his Ph.D. at the Reasoning and Learning Lab (ReaL), IDA, Linköping University, Sweden, where his research focused on developing generative models and fair representation learning techniques that address intersectional bias in AI decision-making systems. Before pursuing his doctorate, Fahim served as a Lecturer in the Department of Computer Science and Engineering at the Institute of Science, Trade, and Technology (ISTT), where he was also Coordinator of the HEAP Programming Club and Coach of the ACM ICPC team.

Experience

-

Ph.D. Student

Linköping University

Department of Computer and Information Science (IDA)

Responsibilities include:

- Research

- Supervising Master’s Theses

- Conducting Labs

- Chair, IDA PhD Council (Board: 2024/25, 2025/26)

- Presidium Member, Linköping University PhD Student Network (LiU PhD) (Board: 2024/25)

- Department Representative (IDA), Linköping University PhD Student Network (LiU PhD) (Board: 2023/24)

-

Lecturer

Institute of Science Trade & Technology (ISTT)

Department of Computer Science & Engineering (CSE)

Responsibilities include:

- Conducting Classes & Labs

- Research

- Supervising Research Students

Education

-

Ph.D. in Computer Science

Linköping University, Sweden

Thesis on Representative Synthetic Data for Fair Decision Making.

-

MS in Computer Science

Jahangirnagar University, Bangladesh

-

BS (Eng.) in Computer Science & Engineering

Gopalganj Science and Technology University, Bangladesh (formerly Bangabandhu Sheikh Mujibur Rahman Science & Technology University)

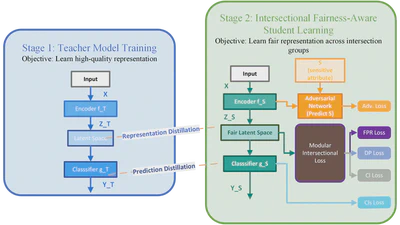

Promoting Intersectional Fairness through Knowledge Distillation